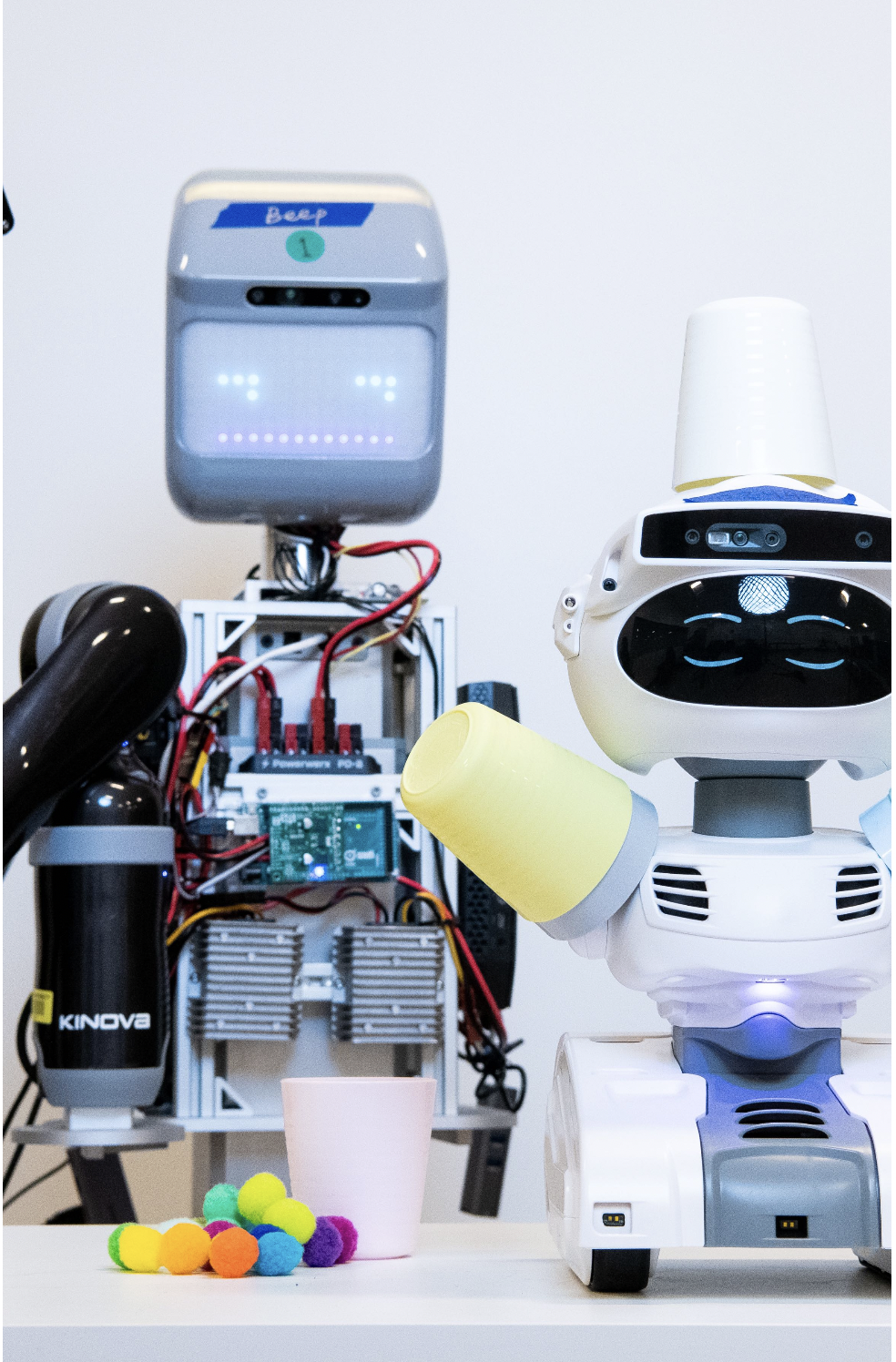

User-Empowering Robot Learning for Assistive Creative Tasks

Led by Dr. Elaine Short

This past summer, I conducted research in the Assistive Agent Behavior and Learning Lab, where I studied the sim-to-real gap in robotics simulations. I investigated how digitally replicated environments often fail to mirror the behavior of real robotic systems, and I explored how human feedback can refine a robot’s simulation so that its virtual performance aligns more closely with reality. I experimented with depth cameras, OpenCV, and 3D reconstruction libraries to scan real objects and import them into the Genesis simulator, documenting the challenges of mesh accuracy, missing depth detail, and models that handle only rigid objects. Working with Iterative Residual Tuning and TuneNet, I learned how previous researchers have approached this problem, and I identified remaining gaps, particularly in deformable materials like clay and in human-centered evaluation.
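The idea behind iterative residual tuning can be illustrated with a toy sketch: compare what the simulator predicts with what was observed, estimate how far off the simulator's parameter is, nudge the parameter, and repeat. The example below is only an illustration under stated assumptions, not the TuneNet implementation. The bouncing-ball simulator, the function names, and the `gain` value are all hypothetical, the "real" observations are generated from a hidden parameter instead of a physical measurement, and a hand-written proportional estimate stands in for the trained neural network that would normally predict the residual.

```python
import numpy as np


def simulate_bounce(restitution, drop_height=1.0, n_bounces=4):
    """Toy simulator (hypothetical): peak height after each bounce.

    Each bounce keeps restitution**2 of the previous peak height.
    """
    bounces = np.arange(1, n_bounces + 1)
    return drop_height * restitution ** (2 * bounces)


def estimate_residual(sim_obs, real_obs, gain=0.5):
    """Stand-in for a learned residual estimator (assumption).

    Higher restitution gives higher bounces, so a positive mean height
    difference means the simulated parameter should increase.
    """
    return gain * float(np.mean(real_obs - sim_obs))


def tune(real_obs, initial_guess, n_iters=20):
    """Repeatedly simulate, estimate the residual, and update the parameter."""
    param = initial_guess
    for _ in range(n_iters):
        sim_obs = simulate_bounce(param)
        param += estimate_residual(sim_obs, real_obs)
    return param


if __name__ == "__main__":
    # "Real" data here is synthetic: generated from a hidden true value.
    real_obs = simulate_bounce(0.8)
    tuned = tune(real_obs, initial_guess=0.5)
    print(f"tuned restitution: {tuned:.4f}")
```

This sketch covers only a single rigid-body parameter. Deformable materials such as clay would need a far richer parameter set and observation model, which is part of the gap described here.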

Skills

Papers Read

3D-R2N2: A Unified Approach for Single and Multi-view 3D Object Reconstruction

Immersive Virtual Sculpting Experience with Elastoplastic Clay Simulation and Real-Time Haptic Feedback

Single image 3D object reconstruction based on deep learning: A review

CLAY: A Controllable Large-scale Generative Model for Creating

Holistic 3D Scene Parsing and Reconstruction from a Single RGB Image

Iterative residual tuning for system identification and sim-to-real robot learning

Further Research for Deformable Objects

The next step for this research would be to represent deformable objects accurately in simulators used for sim-to-real transfer. Although some progress has been made in this area, a gap remains between how deformable materials behave in reality and how they behave in simulation.

Next Steps

Future Work on Simulating Real World Environments

Another gap in the research is accurate simulation of the environment in which deformable objects exist. Measuring unpredictable variables, and capturing how gravity affects clay and Play-Doh, remains a challenge in sim-to-real research.